November 25, 2024 | 22/11/2024

The energy consumed in training and running inference on artificial intelligence (AI) models, including large language models (LLMs) such as GPT-4, has become a critical challenge because of its environmental impact and the costs associated with high-performance computing (HPC). The energy required to train and interact with these models increases significantly as their size and complexity grow.

Training an AI model involves feeding its neural networks large volumes of data and performing many iterations to optimise the model’s parameters and improve its results. These tasks require substantial processing power and therefore considerable electricity. According to Schwartz et al., the computational cost of training machine learning (ML) models increased 300,000-fold in just six years (2013–2019), doubling every 3.4 months. More recent studies indicate that these costs have continued to grow by a factor of 2.4 per year since 2016. Looking solely at AI systems and assuming an intermediate scenario, data centres and servers hosting AI models are projected to consume between 85 and 134 terawatt-hours (TWh) annually by 2027. This figure is comparable to the yearly electricity consumption of countries such as Argentina, the Netherlands, or Sweden, and corresponds to approximately 0.5% of current global electricity consumption.
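The growth figures above are quoted in different units (a total factor, a doubling time, and a yearly factor), so it can help to convert between them. The following is a minimal sketch, assuming constant exponential growth; the function names are illustrative and not taken from any of the cited studies. Under that assumption the quoted figures line up only approximately, which is expected for rounded estimates.

```python
import math


def doublings_for_factor(factor: float) -> float:
    """Number of doublings needed to grow by `factor`."""
    return math.log2(factor)


def annual_factor_from_doubling(doubling_months: float) -> float:
    """Yearly growth factor implied by a constant doubling time (in months)."""
    return 2 ** (12 / doubling_months)


def doubling_months_from_annual(annual_factor: float) -> float:
    """Doubling time (in months) implied by a constant yearly growth factor."""
    return 12 * math.log(2) / math.log(annual_factor)


if __name__ == "__main__":
    n = doublings_for_factor(300_000)
    print(f"300,000-fold growth is about {n:.1f} doublings")
    print(f"at 3.4 months per doubling that takes about {n * 3.4 / 12:.1f} years")
    print(f"3.4-month doubling is about {annual_factor_from_doubling(3.4):.1f}x per year")
    print(f"2.4x per year doubles roughly every {doubling_months_from_annual(2.4):.1f} months")
```

Read this way, a factor of 2.4 per year is a markedly slower pace than a 3.4-month doubling time, although it still compounds quickly.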

However, to fully understand the environmental impact, it is essential to take a holistic view of the ML ecosystem that goes beyond model training and includes the operational carbon footprint of ML. Inference (using a trained model to make real-time predictions) can also consume significant energy, particularly in large-scale applications. Although a single inference consumes far less energy than training, widespread and continuous use in settings such as customer service systems, content generation, or autonomous driving can add up to a greater cumulative energy impact. In fact, for services like ChatGPT, inference has been identified as the primary driver of emissions: according to Chien et al., in a single year it produces 25 times the carbon emissions required for training the model.
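The cumulative effect described above can be illustrated with simple arithmetic. The sketch below is only an illustration: every number in it (training energy, energy per query, daily query volume) is a hypothetical placeholder, not a measurement of ChatGPT or any other service.

```python
def days_until_inference_matches_training(
    training_kwh: float, kwh_per_query: float, queries_per_day: float
) -> float:
    """Days of serving after which cumulative inference energy equals training energy."""
    if training_kwh <= 0 or kwh_per_query <= 0 or queries_per_day <= 0:
        raise ValueError("all inputs must be positive")
    return training_kwh / (kwh_per_query * queries_per_day)


def inference_to_training_ratio(
    training_kwh: float, kwh_per_query: float, queries_per_day: float, days: float
) -> float:
    """Cumulative inference energy over `days`, as a multiple of training energy."""
    return kwh_per_query * queries_per_day * days / training_kwh


if __name__ == "__main__":
    # Hypothetical placeholder values, chosen only to show the calculation.
    training_kwh = 1_000_000.0
    kwh_per_query = 0.003
    queries_per_day = 10_000_000.0

    days = days_until_inference_matches_training(
        training_kwh, kwh_per_query, queries_per_day
    )
    ratio = inference_to_training_ratio(
        training_kwh, kwh_per_query, queries_per_day, days=365
    )
    print(f"break-even after about {days:.0f} days")
    print(f"after one year, inference is about {ratio:.1f}x the training energy")
```

The point of the calculation is that a small per-query cost multiplied by a large, sustained query volume can overtake a one-off training cost within weeks.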

This high energy consumption has several implications:

- Economic Costs: Training large-scale models is expensive due to the substantial hardware requirements and the energy needed to power data centres.

- Environmental Impact: Prolonged training with extensive electricity use contributes to carbon emissions, especially when non-renewable energy sources are involved, thereby challenging AI’s sustainability.

- Optimisation: Developing more efficient training and inference methods is crucial, including model optimisation, specialised hardware such as GPUs and TPUs, and strategies like federated learning and model compression.

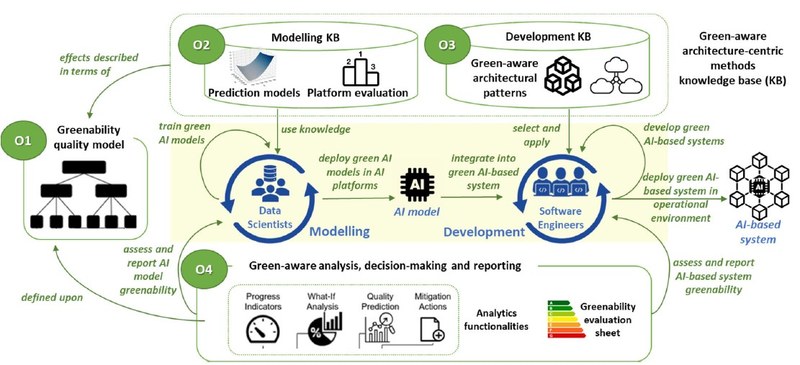

In this context, the Software and Service Engineering Group (GESSI) at the Universitat Politècnica de Catalunya - BarcelonaTech (UPC) is developing GAISSA, a project that has led to the creation of the GAISSALabel software tool. The tool acts as an energy card: it evaluates the energy efficiency of any machine learning model during both the training and inference phases, which makes it particularly valuable for HPC services. GAISSALabel can assess the footprint of machine learning models, helping to improve efficiency, reduce operational costs, and shorten computation times.

GAISSALabel will enable AI model developers to:

- Monitor energy consumption and generate detailed reports.

- Identify areas for improvement to reduce energy consumption when coding the training and inference stages of ML models.

- Optimise models to make them more efficient in terms of time and resources.
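To make the idea of monitoring energy consumption concrete, the sketch below shows one generic way to turn sampled power readings into an energy figure and an emissions estimate. It does not show GAISSALabel's API or internal method, which the text does not describe; the `PowerSample` type, the function names, and the carbon-intensity value are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class PowerSample:
    """One hypothetical power reading: time since start (s) and power draw (W)."""
    t_seconds: float
    watts: float


def energy_kwh(samples: Sequence[PowerSample]) -> float:
    """Integrate power over time with the trapezoidal rule and return kWh."""
    joules = 0.0
    for prev, curr in zip(samples, samples[1:]):
        dt = curr.t_seconds - prev.t_seconds
        if dt < 0:
            raise ValueError("samples must be ordered by time")
        joules += 0.5 * (prev.watts + curr.watts) * dt
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


def emissions_kg(kwh: float, kg_co2_per_kwh: float) -> float:
    """Estimate emissions from energy use and a grid carbon intensity."""
    return kwh * kg_co2_per_kwh


if __name__ == "__main__":
    # Hypothetical readings from a one-hour training run.
    run = [
        PowerSample(0, 250.0),
        PowerSample(1800, 300.0),
        PowerSample(3600, 280.0),
    ]
    used = energy_kwh(run)
    # 0.25 kg CO2 per kWh is an assumed placeholder, not a real grid figure.
    print(f"energy: {used:.3f} kWh, emissions: {emissions_kg(used, 0.25):.3f} kg CO2")
```

Comparing such figures before and after a code change is one simple way to check whether a refactoring actually reduced consumption.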

By following the refactoring recommendations provided by GAISSALabel, ML engineers can enhance the energy efficiency of ML systems by up to 50%. This improvement not only leads to economic savings (e.g., lower electricity bills for data centres and HPC facilities), but also reduces the environmental impact of AI applications.

GAISSALabel offers solutions at three levels:

- Social: Promoting commitment to the energy efficiency of ML systems and empowering end users to select and use sustainable ML solutions.

- Organisational (ML software providers): Providing the necessary resources to develop sustainable ML systems, supported by management and reporting tools aligned with regulatory requirements.

- Individual: Assisting data scientists and ML engineers in understanding and managing energy efficiency in production by raising awareness and providing insights into the energy consumption of ML systems and tools.

The project began in December 2022 and is scheduled to conclude in September 2025, with a total budget of €277,035.00. It is funded by the Ministry of Science, Innovation and Universities, with contributions from the NextGenerationEU funds.
